Let’s do a problem from Chapter 5 of All of Statistics.

Suppose $X_1, \dots, X_n \sim \text{Uniform}(0,1)$. Let $Y_n = \bar{X}_n^2$. Find the limiting distribution of $Y_n$.

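Note that we have $Y_n = \bar{X}_n \bar{X}_n$.

Recall from Theorem 5.5(e) that if $X_n \rightsquigarrow X$ and $Y_n \rightsquigarrow c$, then $X_n Y_n \rightsquigarrow cX$. (Here $X_n$ and $Y_n$ are the theorem's generic sequences, not the ones from the problem.)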

So the question becomes: does $\bar{X}_n \rightsquigarrow c$ for some constant $c$, so that we can use this theorem? The answer is yes. Recall from Theorem 5.4(b) that $X_n \xrightarrow{P} X$ implies $X_n \rightsquigarrow X$. So if we can show that $\bar{X}_n$ converges to a constant in probability, we know that it converges to that constant in distribution. Let’s show that $\bar{X}_n \xrightarrow{P} c$. That’s easy. The law of large numbers tells us that the sample average converges in probability to the expectation. In other words, $\bar{X}_n \xrightarrow{P} \mathbb{E}[X_1]$. Since we are told that $X_1, \dots, X_n$ are i.i.d. from a Uniform(0,1), we know the expectation is $\mathbb{E}[X_1] = 1/2$.
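For this particular problem, the law of large numbers step can also be checked by hand with Chebyshev's inequality. The following is a short sketch rather than part of the original argument; the symbols $\bar{X}_n$ (sample mean) and $\epsilon$ (an arbitrary positive tolerance) are notation assumed here. It uses the fact that a Uniform(0,1) variable has variance $1/12$.

$$
\mathbb{V}(\bar{X}_n) = \frac{\mathbb{V}(X_1)}{n} = \frac{1}{12n},
\qquad
\mathbb{P}\left(\left|\bar{X}_n - \tfrac{1}{2}\right| > \epsilon\right)
\le \frac{\mathbb{V}(\bar{X}_n)}{\epsilon^2}
= \frac{1}{12 n \epsilon^2} \longrightarrow 0
\quad \text{as } n \to \infty .
$$

Since the bound goes to zero for every fixed $\epsilon > 0$, the sample mean converges in probability to $1/2$.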

Putting it all together, we have that:

$$Y_n = \bar{X}_n \bar{X}_n \rightsquigarrow \frac{1}{2} \cdot \frac{1}{2} = \frac{1}{4}$$

(through the argument above)
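It may help to spell out what a constant limit means as a distribution. The sketch below writes $Y_n$ for the squared sample mean and $F_{Y_n}$ for its CDF (notation assumed here). Converging in distribution to the constant $1/4$ means the CDFs converge to that of a point mass at $1/4$, at every point where that limiting CDF is continuous:

$$
\lim_{n \to \infty} F_{Y_n}(y) = F(y) =
\begin{cases}
0, & y < \tfrac{1}{4}, \\
1, & y \ge \tfrac{1}{4},
\end{cases}
\qquad \text{for all } y \ne \tfrac{1}{4}.
$$

In other words, the limiting distribution puts all of its probability on the single value $0.25$.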

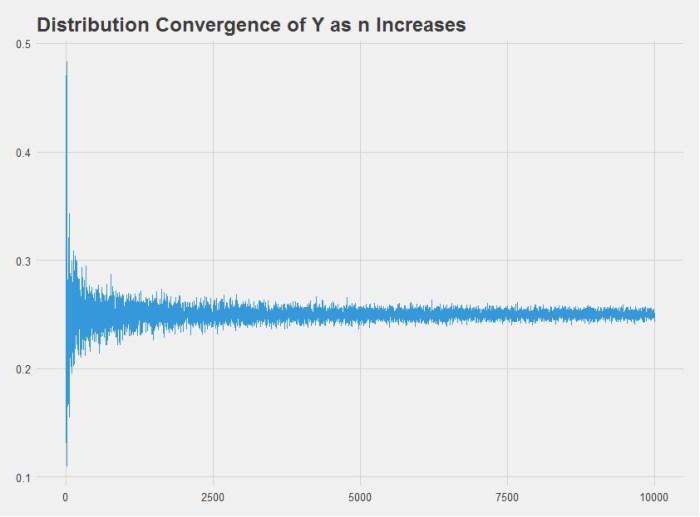

We can also show this by simulation in R, which produces this chart:

Indeed, the simulated values settle around 0.25, matching the answer we derived. Here is the R code used to produce the chart above:

```r
# Load plotting libraries
library(ggplot2)
library(ggthemes)

# Set the seed before drawing any random numbers so the chart is reproducible
set.seed(10)

# Y_n = (mean of n Uniform(0,1) draws)^2
g <- function(n) {
  mean(runif(n))^2
}

# Evaluate Y_n for n = 1, ..., 10000
n <- 1:10000
Y <- sapply(n, g)

# Plot Y_n against n
df <- data.frame(n, Y)
ggplot(df, aes(n, Y)) +
  geom_line(color = '#3498DB') +
  theme_fivethirtyeight() +
  ggtitle('Distribution Convergence of Y as n Increases')
```